As India races to build its own Indic language models, OpenAI has introduced a new benchmark that, it says, tests not only a model’s linguistic ability but also its grasp of Indian cultural context across domains.

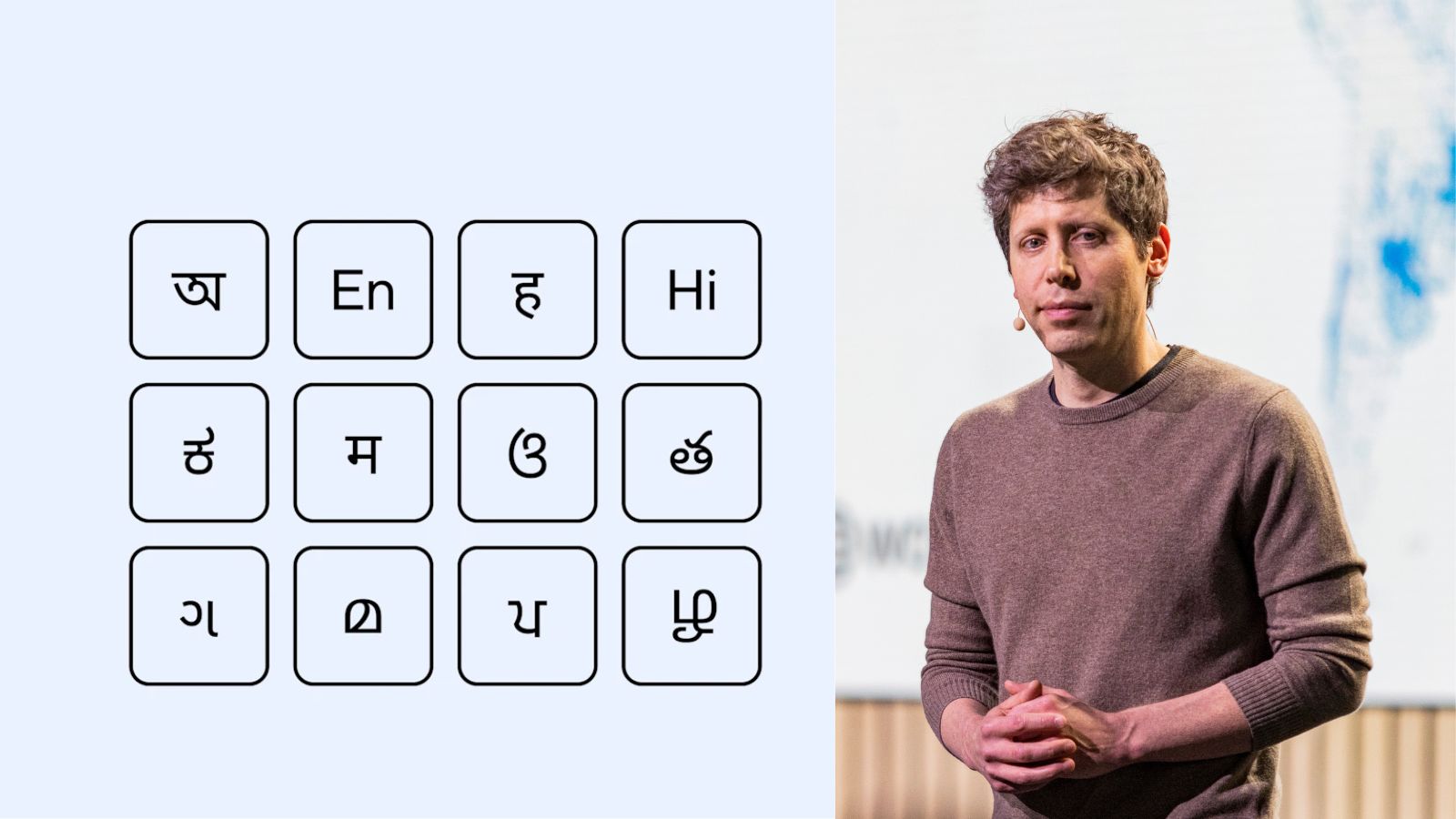

Known as IndQA, the benchmark test comprises 2,278 questions across 12 languages and 10 cultural domains, compiled in partnership with 261 experts from across India, OpenAI said in a blog post on Monday, November 3.

The questions span various topics such as Architecture & Design, Arts & Culture, Everyday Life, Food & Cuisine, History, Law & Ethics, Literature & Linguistics, Media & Entertainment, Religion & Spirituality, and Sports & Recreation. They are written natively in Bengali, English, Hindi, Hinglish, Kannada, Marathi, Odia, Telugu, Gujarati, Malayalam, Punjabi, and Tamil.

“We specifically added Hinglish given the prevalence of code-switching in conversations,” OpenAI said.

The AI startup’s focus on building a benchmark around Indian languages and cultures is significant given that India has emerged as the second-largest market for ChatGPT after the United States. On November 4, OpenAI hosted its DevDay Exchange developer conference in Bengaluru where it made several India-specific announcements. The company is also making its ChatGPT Go subscription plan free for one year to users in India who sign up during the limited promotional period.

“India has about a billion people who don’t use English as their primary language, 22 official languages (including at least seven with over 50 million speakers), and is ChatGPT’s second largest market,” OpenAI said. “While our aim is to create similar benchmarks for other languages and regions, India is an obvious starting point,” it added.

How the IndQA benchmark works

As part of the benchmark test, AI models are asked questions in the form of a culturally grounded prompt in an Indian language. Each question also comes with an English translation for auditability and an ideal answer that reflects expert expectations.

The model’s response is graded against criteria written by domain experts for that specific question. These criteria spell out what an ideal answer should include or avoid, and each is assigned a weighted point value based on its importance, in a rubric-based approach.

At the end, an AI model grader checks whether each criterion is met and generates a final score by calculating the sum of the points for criteria satisfied divided by the total possible points.
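
The scoring arithmetic described above can be illustrated with a minimal sketch. This is not OpenAI's grader: the `Criterion` class, the `rubric_score` function, and the sample criteria and point values are all hypothetical, and the sketch assumes every criterion carries a positive weight.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    """One rubric item for a question (hypothetical structure)."""
    description: str
    points: float  # weight reflecting importance; assumed positive here
    met: bool      # the grader's verdict on the model's response


def rubric_score(criteria: list[Criterion]) -> float:
    """Return points earned for satisfied criteria divided by total possible points."""
    total = sum(c.points for c in criteria)
    if total <= 0:
        raise ValueError("rubric must have a positive total point value")
    earned = sum(c.points for c in criteria if c.met)
    return earned / total


# Illustrative rubric with made-up criteria and weights.
example = [
    Criterion("Names the correct regional tradition", 5, True),
    Criterion("Explains its historical origin", 3, False),
    Criterion("Avoids conflating it with a similar practice", 2, True),
]
print(f"{rubric_score(example):.2f}")
```

In this made-up case the response earns 7 of 10 possible points, so missing the mid-weighted criterion costs more than missing the lowest-weighted one would.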

To be sure, IndQA has not been designed as an LLM leaderboard that ranks models based on their scores. Additionally, a model’s cross-language scores cannot be used to state that it is, for instance, better at Kannada than Hindi. Instead, the scores are meant to measure improvement over time within a model family or configuration, as per OpenAI.

How it was designed to capture cultural nuance

The task of drafting difficult, reasoning‑focused questions tied to regional and cultural context was outsourced to experts in ten different domains, OpenAI said. This group of 261 experts comprised journalists, linguists, scholars, artists, and industry practitioners, including an award-winning Telugu actor, a Malayalam poet, a Punjabi music composer, and an international chess grandmaster, among others.

In its next step, OpenAI filtered the questions by testing them against its own AI models such as GPT‑4o, o3, and GPT‑4.5. “We kept only those questions where a majority of these models failed to produce acceptable answers, preserving headroom for progress,” it said. Finally, experts added ideal answers and their English translations, followed by peer review and iterative fixes.
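
The majority-failure filter can be sketched roughly as follows. OpenAI has not published its filtering code, so the `keep_question` function and the pass/fail inputs are hypothetical, and the sketch assumes a tie does not count as a majority.

```python
def keep_question(acceptable_by_model: dict[str, bool]) -> bool:
    """Keep a question only if more than half of the tested models failed it.

    `acceptable_by_model` maps a model name to whether its answer was judged
    acceptable. How acceptability was judged is not specified in the source.
    """
    if not acceptable_by_model:
        raise ValueError("at least one model result is required")
    failures = sum(1 for ok in acceptable_by_model.values() if not ok)
    return failures > len(acceptable_by_model) / 2


# Made-up verdicts for two candidate questions.
hard_question = {"GPT-4o": False, "o3": False, "GPT-4.5": True}
easy_question = {"GPT-4o": True, "o3": True, "GPT-4.5": False}

print(keep_question(hard_question))
print(keep_question(easy_question))
```

Under these assumptions the first question is kept because two of three models failed it, while the second is dropped.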

Because the test questions were chosen based on where OpenAI’s own models struggled, the company said its models may be at a disadvantage compared to other models.

Can IndQA level the playing field for Indic LLMs?

Large language models (LLMs) built for Indic languages could serve as a differentiator for India in the global AI arms race. However, developing Indic LLMs faces two key challenges: the lack of high-quality datasets and the absence of local benchmarks to evaluate them.

For the past few years, the progress of AI models has primarily been tracked through a set of familiar, multilingual benchmarks such as MMMLU and MGSM. But these benchmarks have been criticised because they fail to capture an AI model’s understanding of local context, culture, history, and the things that matter to people where they live.

Furthermore, existing language benchmarks focus primarily on translation or multiple-choice tasks. Indian AI startups such as Sarvam have repeatedly identified the absence of standardised benchmarks for Indic languages as a major barrier to competing with global counterparts.

Since existing benchmarks are mainly focused on English and European languages, they could potentially hinder AI adoption in India where AI-powered speech recognition requires processing of multiple accents and mixing of English with local languages.

LLM leaderboards maintained by Western organisations have also been accused of bias. Recently, Gurugram-based Shunya Labs claimed that its speech model Pingala was not ranked at the top of Hugging Face’s OpenASR leaderboard despite scoring higher than Nvidia’s model.

“Our speech model, Pingala, posted breakthrough results with a 3.1% (word error rate) WER vs Nvidia’s 5.6%. By every metric, it should’ve gone straight to the top. Instead, it’s been stuck in a black box process where competitors hold the keys,” Ritu Mehrotra, co-founder and CEO of Shunya Labs, said in a post on LinkedIn.

“This isn’t just frustrating — it’s a warning. If “open” AI can be gated by the same trillion-dollar players it claims to challenge, then who is the system really built for?” she added.