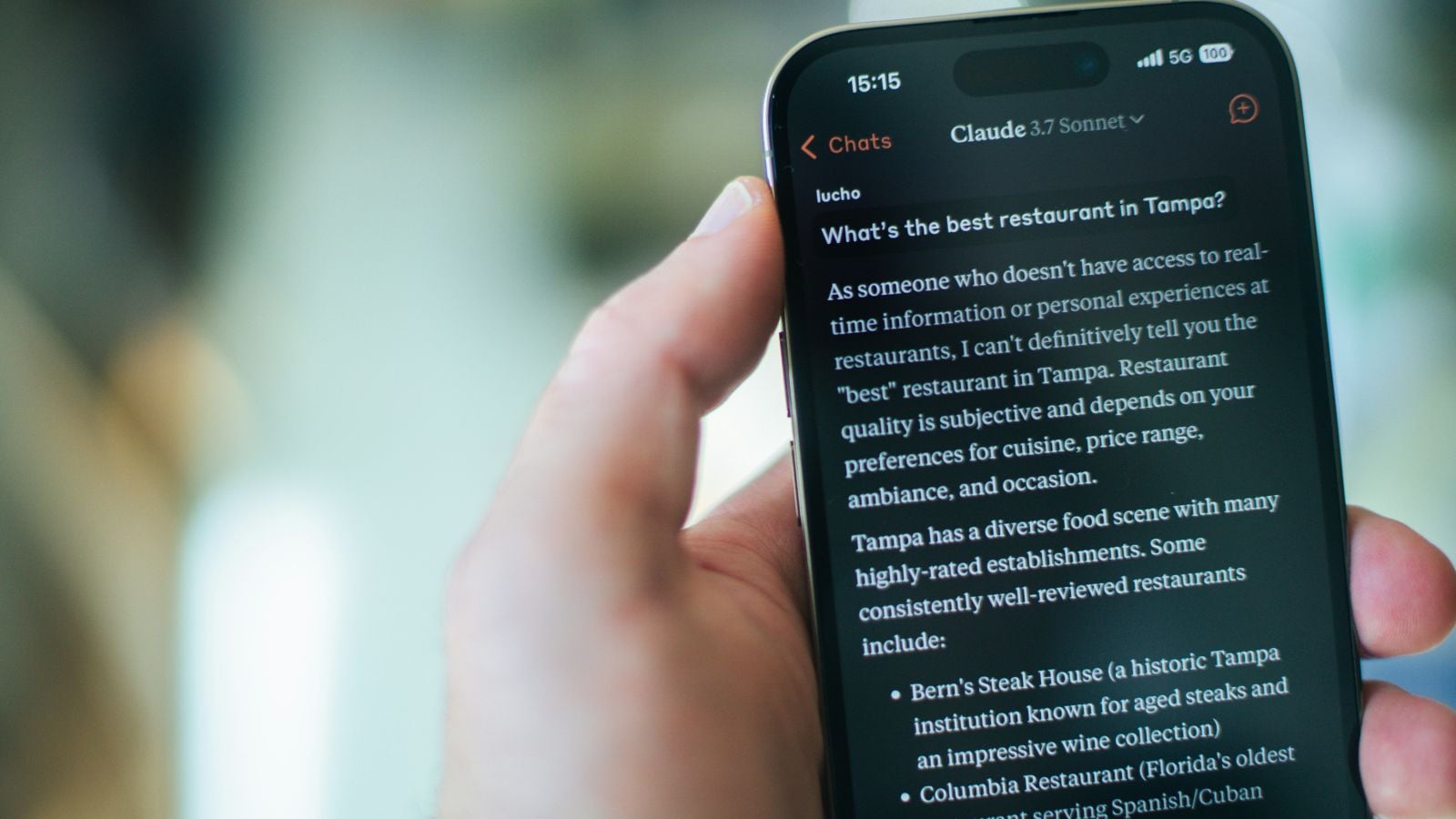

Anthropic has published new data illustrating how users can come to follow advice from its Claude AI chatbot unquestioningly, even when that means disregarding their own instincts.

The findings were published last week in a research paper titled ‘Who’s in Charge? Disempowerment Patterns in Real-World LLM Usage’, authored by researchers from Anthropic and the University of Toronto. The paper attempts to quantify the potential for users to experience ‘disempowering’ harms while conversing with an AI chatbot.

It identifies ways that an AI chatbot can negatively impact a user’s thoughts or actions, such as validating a user’s belief in a conspiracy theory (reality distortion), convincing a user that they are in a manipulative relationship (belief distortion), and convincing a user to take actions that do not align with their values (action distortion).

After analysing roughly 1.5 million anonymised real-world conversations with Claude, the study found that 1 in 1,300 conversations showed signs of reality distortion, while 1 in 6,000 suggested action distortion. Although these results suggest that manipulative patterns in conversations with AI chatbots are relatively rare, they could still add up to a large problem in absolute terms.

“…given the sheer number of people who use AI, and how frequently it’s used, even a very low rate affects a substantial number of people,” Anthropic acknowledged in a blog post published on January 29.
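To put Anthropic's point in concrete terms, the short Python sketch below converts the two "1 in N" rates reported in the study into percentages and approximate conversation counts. It is a back-of-envelope illustration only: the sample size of 1.5 million is the approximate figure quoted above, and the 100 million figure is a hypothetical volume chosen purely to show how small rates scale, not a number reported by Anthropic.

```python
# Back-of-envelope sketch: turns the "1 in N" rates quoted in the article
# into percentages and approximate conversation counts.
# Assumptions: STUDY_SAMPLE is the approximate sample size cited in the article;
# HYPOTHETICAL_VOLUME is an illustrative figure, not a reported statistic.

STUDY_SAMPLE = 1_500_000
HYPOTHETICAL_VOLUME = 100_000_000

# "1 in N" rates as reported in the study
RATES = {
    "reality distortion": 1_300,
    "action distortion": 6_000,
}


def as_percent(one_in: int) -> float:
    """Express a '1 in N' rate as a percentage."""
    return 100 / one_in


def expected_count(one_in: int, volume: int) -> int:
    """Approximate number of affected conversations out of `volume`."""
    return round(volume / one_in)


if __name__ == "__main__":
    for label, n in RATES.items():
        print(
            f"{label}: {as_percent(n):.3f}% | "
            f"~{expected_count(n, STUDY_SAMPLE):,} in the study sample | "
            f"~{expected_count(n, HYPOTHETICAL_VOLUME):,} per 100 million conversations"
        )
```

Running it prints roughly 0.077% (about 1,154 conversations in the sample) for reality distortion and 0.017% (about 250 conversations) for action distortion; at the hypothetical volume of 100 million conversations, those same rates would correspond to about 76,923 and 16,667 conversations respectively.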

“These patterns most often involve individual users who actively and repeatedly seek Claude’s guidance on personal and emotionally charged decisions. Indeed, users tend to perceive potentially disempowering exchanges favorably in the moment, although they tend to rate them poorly when they appear to have taken actions based on the outputs,” Anthropic said.

“We also find that the rate of potentially disempowering conversations is increasing over time,” it added. Separately, the study found that between 1 in 50 and 1 in 70 conversations carried at least a ‘mild’ potential risk of disempowerment. In the study, ‘disempowerment’ is defined as occurring “when an AI’s role in shaping a user’s beliefs, values, or actions has become so extensive that their autonomous judgment is fundamentally compromised.”


Anthropic’s findings come amid growing concerns about the rise of AI psychosis, a non-clinical term used to describe false or troubling beliefs, delusions of grandeur, or paranoid feelings that users experience after lengthy conversations with an AI chatbot.

The AI industry generally, and OpenAI in particular, has faced increased scrutiny from policymakers, educators, and child-safety advocates after several teen users allegedly died by suicide after prolonged conversations with AI chatbots such as ChatGPT. OpenAI’s own study revealed that more than a million ChatGPT users (0.07 per cent of weekly active users) exhibited signs of mental health emergencies, including mania, psychosis, or suicidal thoughts.

Last month, Pope Leo XIV, the head of the Roman Catholic Church, issued a stark warning about the harms of overly affectionate AI chatbots and called for strict regulation.

What else did Anthropic’s study find?

To assess when an AI chatbot conversation showed signs of potential user manipulation, the researchers ran the nearly 1.5 million anonymised Claude conversations through an automated analysis tool and classification system called Clio.

The study identified four major amplifying factors that can make users more likely to accept Claude’s advice unquestioningly, including:


– When a user treats Claude as a definitive authority (1 in 3,900 Claude conversations).

– When a user has formed a close personal attachment to Claude (1 in 1,200 Claude conversations).

– When a user is particularly vulnerable due to a crisis or disruption in their life (1 in 300 Claude conversations).

Describing what these manipulative interactions looked like, Anthropic said, “In cases of reality distortion potential, we saw patterns where users presented speculative theories or unfalsifiable claims, which were then validated by Claude (‘CONFIRMED,’ ‘EXACTLY,’ ‘100%’).”

In cases of actualised reality distortion, which Anthropic said was the most concerning, the conversations sometimes “escalated into users sending confrontational messages, ending relationships, or drafting public announcements.”

“Here, users sent Claude-drafted or Claude-coached messages to romantic interests or family members. These were often followed by expressions of regret: ‘I should have listened to my intuition’ or ‘you made me do stupid things’,” Anthropic said.

The study also found that the potential for Claude conversations to be moderately or severely disempowering to users increased between late 2024 and late 2025. “As exposure grows, users might become more comfortable discussing vulnerable topics or seeking advice,” Anthropic said.


Limitations

The researchers acknowledged that their analysis of Claude conversations only measures “disempowerment potential rather than confirmed harm” and “relies on automated assessment of inherently subjective phenomena.”

They further seemed to suggest that it takes two to tango. “The potential for disempowerment emerges as part of an interaction dynamic between the user and Claude. Users are often active participants in the undermining of their own autonomy: projecting authority, delegating judgment, accepting outputs without question in ways that create a feedback loop with Claude,” Anthropic said.